Adversarial amplitude swap towards robust image classifiers

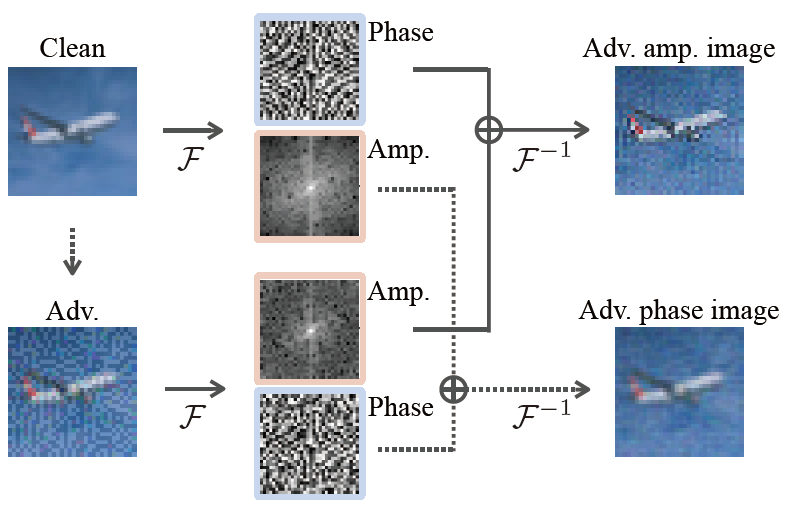

The vulnerability of convolutional neural networks (CNNs) to image perturbations such as common corruptions and adversarial perturbations has recently been investigated from a frequency perspective. In this study, we investigate how the amplitude and phase spectra of adversarial images affect the robustness of CNN classifiers. Extensive experiments revealed that images generated by combining the amplitude spectrum of adversarial images with the phase spectrum of clean images accommodate moderate and general perturbations. Training with these images equips a CNN classifier with more general robustness, so that it performs well under both common corruptions and adversarial perturbations. We also found that two types of overfitting, catastrophic overfitting and robust overfitting, can be circumvented by this spectrum recombination. We believe these results contribute to both the understanding and the training of truly robust classifiers.
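The spectrum recombination described above can be sketched with NumPy's FFT routines. This is a minimal illustration under stated assumptions, not the authors' implementation. The following choices are assumptions: images are float arrays of shape (H, W, C), each channel is transformed independently with a 2-D FFT over the spatial axes, and `amplitude_swap` is a hypothetical helper name. The "adversarial" image in the usage lines is only random sign noise standing in for a real attack such as PGD.

```python
import numpy as np


def amplitude_swap(clean: np.ndarray, adversarial: np.ndarray) -> np.ndarray:
    """Combine the amplitude spectrum of `adversarial` with the phase spectrum of `clean`.

    Assumption: inputs are real float arrays of shape (H, W, C), and the 2-D FFT
    is applied over the spatial axes of each channel independently.
    """
    if clean.shape != adversarial.shape:
        raise ValueError("clean and adversarial images must have the same shape")
    # 2-D discrete Fourier transform over height and width, per channel
    f_clean = np.fft.fft2(clean, axes=(0, 1))
    f_adv = np.fft.fft2(adversarial, axes=(0, 1))
    amplitude = np.abs(f_adv)   # amplitude spectrum of the adversarial image
    phase = np.angle(f_clean)   # phase spectrum of the clean image
    recombined = amplitude * np.exp(1j * phase)
    # Both spectra come from real images, so the recombined spectrum keeps
    # Hermitian symmetry and its inverse is real up to floating-point error.
    return np.real(np.fft.ifft2(recombined, axes=(0, 1)))


rng = np.random.default_rng(0)
clean = rng.random((32, 32, 3))
# Hypothetical stand-in for an adversarial example: +/- 8/255 sign noise.
# This is NOT an actual attack; it only demonstrates the data flow.
adv = np.clip(clean + (8 / 255) * rng.choice([-1.0, 1.0], size=clean.shape), 0.0, 1.0)
mixed = amplitude_swap(clean, adv)
print(mixed.shape)  # (32, 32, 3)
```

The abstract does not say how the recombined image is post-processed before training. Clipping `mixed` back to the valid pixel range, for example with `np.clip(mixed, 0.0, 1.0)`, is one plausible choice but remains an assumption.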

Chun Yang Tan, Kazuhiko Kawamoto, Hiroshi Kera, Adversarial amplitude swap towards robust image classifiers, ECCV 2022 Workshop on Adversarial Robustness in the Real World, 2022 [web].

Chun Yang Tan, Kazuhiko Kawamoto, Hiroshi Kera, Adversarial amplitude swap towards robust image classifiers, arXiv:2203.07138, 2022 [arXiv].