Game-Theoretic Approach to Explainable AI

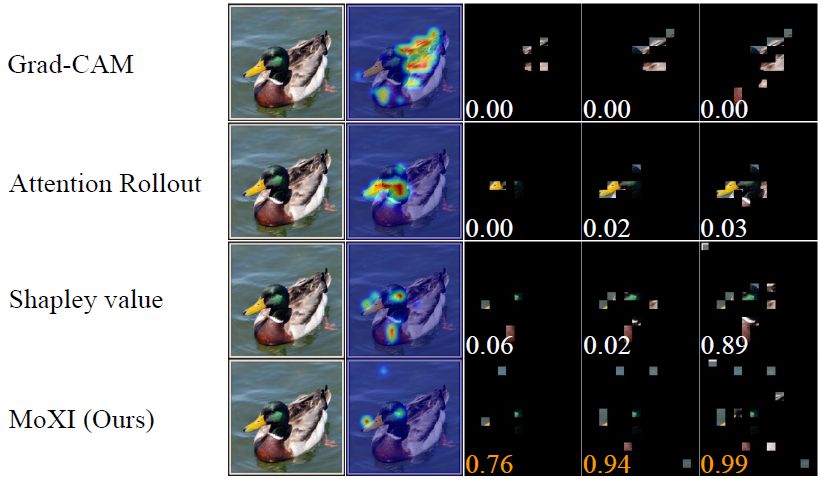

Identifying Important Group of Pixels using Interactions

To better understand the behavior of image classifiers, it is useful to visualize the contribution of individual pixels to the model prediction. In this study, we propose a method, MoXI (Model eXplanation by Interactions), that efficiently and accurately identifies a group of pixels with high prediction confidence. The proposed method employs two game-theoretic concepts, Shapley values and interactions, to account for both the effects of individual pixels and the cooperative influence of pixels on model confidence. Theoretical analysis and experiments demonstrate that our method identifies the pixels that contribute most to the model outputs better than widely used visualization methods based on Grad-CAM, attention rollout, and Shapley values. While prior studies have suffered from the exponential computational cost of computing Shapley values and interactions, we show that this can be reduced to linear cost for our task.
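For readers unfamiliar with the two game-theoretic quantities named above, the following is a minimal sketch of how Shapley values and pairwise Shapley interactions are defined, computed by brute-force enumeration on a toy game. It is not the MoXI implementation: the function names and the toy confidence function `toy_confidence` are assumptions made for illustration, and the enumeration shown here is exactly the exponential-cost computation that the method is designed to avoid.

```python
from itertools import combinations
from math import factorial


def shapley_value(f, n, i):
    """Exact Shapley value of player i in an n-player game f (exponential cost)."""
    others = [p for p in range(n) if p != i]
    total = 0.0
    for size in range(n):
        weight = factorial(size) * factorial(n - size - 1) / factorial(n)
        for subset in combinations(others, size):
            s = frozenset(subset)
            # Marginal contribution of i when added to the context s.
            total += weight * (f(s | {i}) - f(s))
    return total


def shapley_interaction(f, n, i, j):
    """Exact pairwise Shapley interaction index between players i and j."""
    others = [p for p in range(n) if p not in (i, j)]
    total = 0.0
    for size in range(n - 1):
        weight = factorial(size) * factorial(n - size - 2) / factorial(n - 1)
        for subset in combinations(others, size):
            s = frozenset(subset)
            # Extra gain from having i and j together, beyond each one alone.
            delta = f(s | {i, j}) - f(s | {i}) - f(s | {j}) + f(s)
            total += weight * delta
    return total


def toy_confidence(s):
    """Hypothetical stand-in for model confidence on a set of visible patches.

    Patches 0 and 1 each help a little and help much more together;
    patch 2 contributes independently of the others.
    """
    score = 0.0
    score += 0.1 if 0 in s else 0.0
    score += 0.2 if 1 in s else 0.0
    score += 0.05 if 2 in s else 0.0
    score += 0.5 if (0 in s and 1 in s) else 0.0
    return score


if __name__ == "__main__":
    n = 3
    for i in range(n):
        print(f"Shapley value of patch {i}: {shapley_value(toy_confidence, n, i):.3f}")
    print(f"Interaction (0, 1): {shapley_interaction(toy_confidence, n, 0, 1):.3f}")
    print(f"Interaction (0, 2): {shapley_interaction(toy_confidence, n, 0, 2):.3f}")
```

In this toy game, the cooperative term is split evenly between patches 0 and 1 in their Shapley values, while the interaction index isolates it: it is positive for the pair (0, 1) and zero for the pair (0, 2). In a real setting, `f` would be replaced by the classifier's confidence on an image with only the selected patches visible.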

Kosuke Sumiyasu, Kazuhiko Kawamoto, Hiroshi Kera, Identifying Important Group of Pixels using Interactions, CVPR, pp. 6017-6026, 2024 [paper][arXiv][GitHub].

Kosuke Sumiyasu, Kazuhiko Kawamoto, Hiroshi Kera, Identifying Important Group of Pixels using Interactions, ICCV 2023 Workshop on Uncertainty Quantification for Computer Vision, 2023 [web].

Kosuke Sumiyasu, Kazuhiko Kawamoto, Hiroshi Kera, Identifying Important Group of Pixels using Interactions, arXiv:2401.03785, 2024 [arXiv].

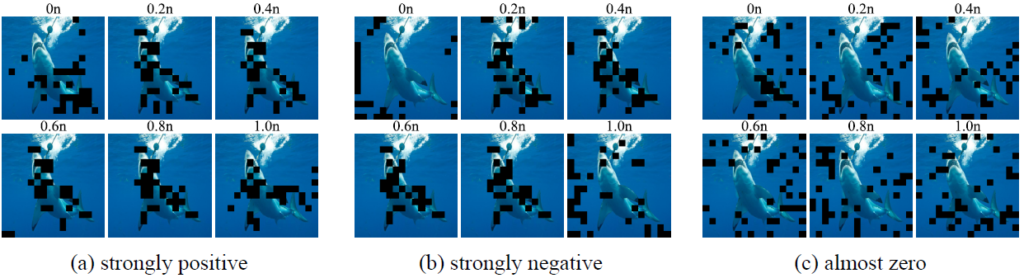

Game-Theoretic Understanding of Misclassification in Deep Learning

This study analyzes various types of image misclassification from a game-theoretic view. In particular, we consider the misclassification of clean, adversarial, and corrupted images and characterize it through the distribution of multi-order interactions. We find that the distribution of multi-order interactions varies across the types of misclassification. For example, misclassified adversarial images have a higher strength of high-order interactions than correctly classified clean images, which indicates that adversarial perturbations create spurious features that arise from complex cooperation between pixels. By contrast, misclassified corrupted images have a lower strength of low-order interactions than correctly classified clean images, which indicates that corruptions break the local cooperation between pixels. We also provide the first analysis of Vision Transformers using interactions. We find that Vision Transformers show a different tendency in the distribution of interactions from that of CNNs, which implies that they exploit features that CNNs do not use for prediction. Our study demonstrates that the recent game-theoretic analysis of deep learning models can be broadened to analyze various malfunctions of deep learning models, including Vision Transformers, by using the distribution, order, and sign of interactions.
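To make the notion of interaction order concrete, here is a minimal sketch assuming the commonly used definition of the multi-order interaction: the order-s interaction between pixels i and j is the average, over all contexts S of size s that exclude i and j, of f(S ∪ {i, j}) − f(S ∪ {i}) − f(S ∪ {j}) + f(S). The function name and the two toy games below are hypothetical and stand in for a real model's output; they are not taken from the paper's code.

```python
from itertools import combinations
from statistics import mean


def multi_order_interaction(f, n, i, j, order):
    """Order-`order` interaction between players i and j in an n-player game f.

    Averages the joint effect of i and j over every context of exactly
    `order` other players (0 <= order <= n - 2).
    """
    others = [p for p in range(n) if p not in (i, j)]
    deltas = []
    for context in combinations(others, order):
        s = frozenset(context)
        deltas.append(f(s | {i, j}) - f(s | {i}) - f(s | {j}) + f(s))
    return mean(deltas)


def needs_full_context(s):
    """Toy game: players 0 and 1 cooperate only when players 2 and 3 are also present."""
    return 1.0 if s >= {0, 1, 2, 3} else 0.0


def broken_by_context(s):
    """Toy game: players 0 and 1 cooperate only when no other player is present."""
    return 1.0 if {0, 1} <= s and not (s & {2, 3}) else 0.0


if __name__ == "__main__":
    n = 4
    for name, game in [("needs_full_context", needs_full_context),
                       ("broken_by_context", broken_by_context)]:
        profile = [multi_order_interaction(game, n, 0, 1, s) for s in range(n - 1)]
        print(name, profile)
```

The first toy game concentrates its interaction at the highest order (cooperation that appears only with a large context), while the second concentrates it at order 0 (local cooperation that needs no context). Comparing such per-order profiles across images is the kind of analysis the abstract describes. Averaging the order-s interactions uniformly over s = 0, …, n − 2 recovers the pairwise Shapley interaction index.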

Kosuke Sumiyasu, Kazuhiko Kawamoto, Hiroshi Kera, Game-Theoretic Understanding of Misclassification, arXiv:2210.03349, 2022 [arXiv].